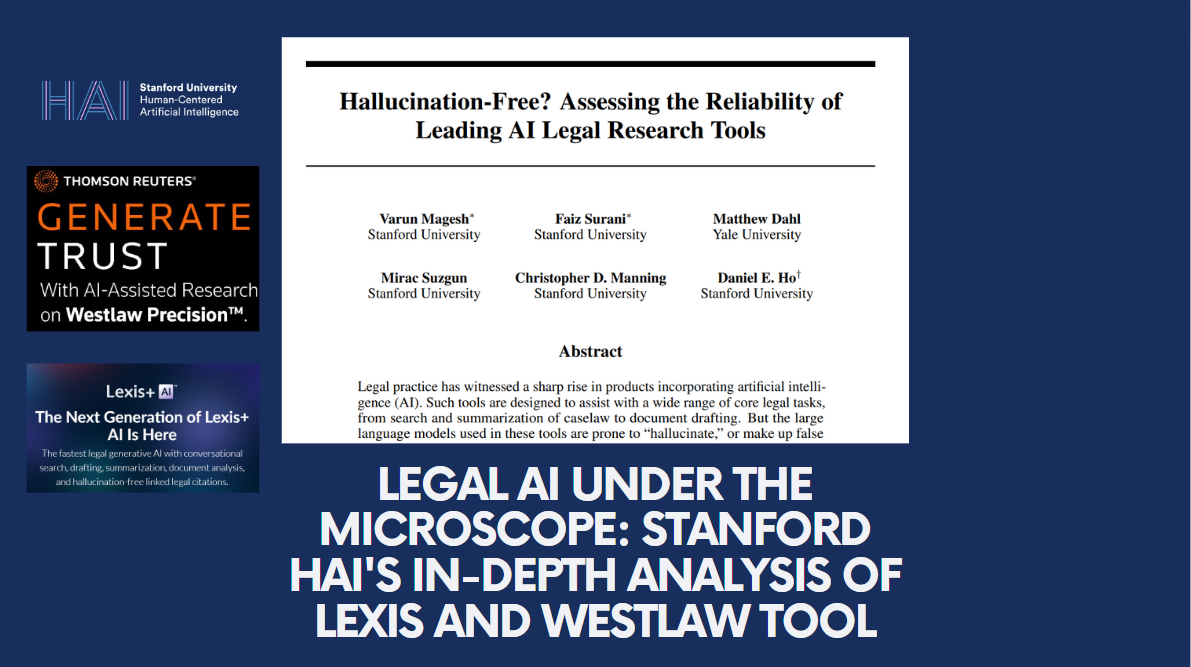

Reflections on the Stanford HAI Report on Legal AI Research Tools

The updated paper from the Stanford HAI team presents rigorous and insightful research into the performance of generative AI tools for legal research. The complexity of legal research is well-acknowledged, and this study looks into the capabilities and limitations of AI-driven legal research tools like Lexis+ AI and Westlaw AI-Assisted Research.

Stanford and Unclean Hands

This is the Stanford Human-Centered Artificial Intelligence (HAI) team’s second bite at this apple. The first version of this report was criticized by me and others for using Thomson Reuters’ Practical Law AI tool as a legal research tool instead of using Westlaw Precision AI (which they call Westlaw AI-AR in their report). In the follow-up report, in the blog post, and in LinkedIn posts, the authors of the report made it sound like Thomson Reuters had some nefarious reason for this. In reality, TR had not released the AI tool to any academic institutions, so the Stanford group was not singled out.

In the original report, this should have been stated front and center; instead, it was not mentioned until page 9. It appears to me that Stanford HAI released the initial report in an attempt to pressure TR to give them access, which did work. However, this type of maneuver from Stanford HAI may have repercussions on whether the legal industry is willing to give them a second chance and take this report seriously. We expect a high degree of ethics from prestigious institutions like Stanford, and in my opinion, this team of researchers did not meet those expectations in the initial report.

Challenges in Legal Research

Legal research is inherently challenging due to the intricate and nuanced nature of legal language and the vast array of case law, statutes, and regulations that must be navigated. The Stanford researchers designed their study with prompts that often included false statements or were worded in ways that tested the AI tools’ ability to understand legal complexities. This approach, while insightful, introduces a certain bias aimed at identifying the tools’ vulnerabilities. I would not say that they cherry-picked the results for the paper, but that the questions posed were designed to challenge the systems they were testing. This is not a bad thing, just something to consider when reading the report.

AI Tools: Strengths and Weaknesses

One of the primary advantages and disadvantages of legal research generative AI tools is their attempt to provide direct answers to user prompts. Traditional legal research tools like Westlaw and Lexis previously operated by presenting users with a list of relevant cases, statutes, and regulations based on Boolean or natural language searches. The shift to AI-generated responses aims to streamline this process but comes with its own set of challenges.

For instance, Westlaw’s Precision AI (Westlaw AI-AR) often produces lengthy answers, which can be a double-edged sword. While these detailed responses can be informative, they also increase the risk of including inaccuracies or “hallucinations.” The study highlights that these AI tools sometimes produce errors, raising questions about the effectiveness of their initial vector search compared to traditional Boolean and natural language searches. The researchers gave each result a Correct/Incorrect/Refusal score. To be scored correct, the AI-generated answer had to be 100% correct. Any inaccurate information automatically resulted in an incorrect score. If an answer had five points of reasoning, and only one of those was factually incorrect or hallucinated, the entire answer was ruled incorrect. That is something else to keep in mind when looking at the statistics.
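To make that all-or-nothing rule concrete, here is a minimal sketch in Python. It is my own illustration, not the researchers’ code: the names `Score`, `score_answer`, and `fraction_accurate` are hypothetical, and treating an answer with no points of reasoning as a refusal is an assumption I made to keep the example simple.

```python
from enum import Enum


class Score(Enum):
    CORRECT = "correct"
    INCORRECT = "incorrect"
    REFUSAL = "refusal"


def score_answer(points, refused=False):
    """Score one answer under an all-or-nothing rule.

    points: list of bools, one per point of reasoning (True = accurate).
    Assumption for this sketch: an answer with no points counts as a refusal.
    """
    if refused or not points:
        return Score.REFUSAL
    # A single inaccurate point makes the whole answer incorrect.
    return Score.CORRECT if all(points) else Score.INCORRECT


def fraction_accurate(points):
    """Share of individual points that were accurate (for comparison only)."""
    return sum(points) / len(points)


# Five points of reasoning, one of them wrong.
answer = [True, True, True, True, False]
print(score_answer(answer).value)   # incorrect
print(fraction_accurate(answer))    # 0.8
```

The same answer is 80% accurate point by point, yet it counts as fully incorrect in the headline numbers. Neither view is wrong, but they measure different things.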

Comparing AI and Traditional Searches

A key point of interest is how AI search results for statutes, regulations, and cases compare with traditional search methods. The study suggests that while the AI tools’ vector search technology is promising in retrieving relevant documents, the accuracy of the AI-generated responses is still a concern. Users must still verify the AI’s answers against the actual documents, much like traditional legal research. As my friend Tony Thai from Hyperdraft would jokingly say, “I mean we could just do the regular research and I don’t know…READ like we are paid to do.”

The Role of Vendors and the Future of Legal AI

Vendors like Westlaw and Lexis need to take this report seriously. The previous version of the study had its limitations, but the updated report addresses many of these deficiencies, providing a more comprehensive evaluation of the AI tools. While vendors may point out that the study is based on earlier versions of their products, the fundamental issues highlighted remain relevant.

The legal community has not abandoned these AI tools despite their flaws, primarily due to the decades of goodwill these vendors have built. The industry understands that this technology is still in its infancy. With the exception of CoCounsel, which was not directly evaluated in this study, these products have not reached their first birthday yet. This is very new technology that vendors and customers both want released quickly, with issues fixed along the way. Vendors have the opportunity to engage in honest conversations with their customers about how they plan to improve these tools and reduce hallucinations. Vendors need to respect their customers and be forthcoming about the issues they are working to correct. Creative advertising may create some short-term wins but may damage long-term relationships. Vendors are bending themselves into pretzels to advertise some version of “hallucination-free” on websites and marketing media when everyone knows that hallucinations in Generative AI tools are a feature, not a bug.

RAG as a Stop-Gap Measure

The study underscores that Retrieval-Augmented Generation (RAG) is a temporary solution, something I have discussed with the vendors for a while now. For generative AI tools to truly excel, users need more sophisticated ways to manipulate their searches, refine retrieval processes, and interact with the AI. Currently, the system is basic and limited, but there is optimism that it will improve with time and consumer pressure. RAG is one of the first steps toward improving AI-generated results for legal research, not the last.

Personal Reflections and Industry Implications

Reflecting on the broader implications, it is clear that the AI tools in their current form are not yet replacements for thorough, human-conducted legal research. The tools offer significant promise but must evolve to become more reliable and versatile. Legal professionals must remain vigilant in verifying AI-generated outputs and continue to advocate for advancements that will make these tools more robust and dependable. We are right in the middle of the Trough of Disillusionment on the Gartner Hype Cycle. This means that customers are expecting solid results, and while the legal industry is in an unusual honeymoon period when it comes to forgiving AI tools for hallucinations, this honeymoon will not last much longer. Lawyers are notorious for trying a product once: if it works, they stick with it, and if it doesn’t, it may be months or years before they are willing to try it again. We are almost at that point in the industry with Generative AI tools that don’t live up to their advertised abilities.

While the Stanford HAI report sheds light on the current state of legal AI research tools, it also provides a roadmap for future improvements. The legal community must work collaboratively with vendors to refine these Generative AI technologies, ensuring they become valuable assets in the practice of law.